When ChatGPT Becomes a Crutch: ADHD, Productivity and the Risk of AI Overdependence

He runs his own business, is 44 and has ADHD. His ChatGPT Wrapped shows he’s in the top 0.3% of users worldwide — a badge of productivity that can hide a quieter problem: when every little decision and admin task routes through a chatbot, are we outsourcing our ability to plan and decide?

Quick checklist

- Ask: “What gap is this tool filling for you?”

- Spot red flags: constant reliance for basic planning, anxiety if the tool is unavailable, reduced practice of core tasks.

- Try a 1-week experiment: one AI-free planning hour per day, plus specialist apps for domain tasks.

- For leaders: implement audit logs, role‑based AI use, and periodic “AI off” drills to preserve skills.

Why ChatGPT and AI agents help people with ADHD

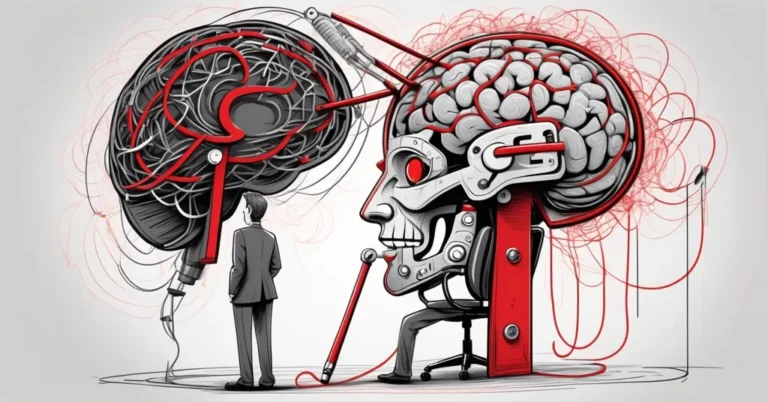

For many people with ADHD — where executive function (planning, task initiation, working memory and self‑monitoring) is a challenge — generative AI can be a force multiplier. Conversational AI agents like ChatGPT make it easy to structure thoughts, turn vague goals into step‑by‑step plans, draft emails, and automate repetitive admin. That’s AI for productivity at its simplest: lowering friction so work actually gets done.

“Scaffold” is a useful metaphor: AI provides temporary structure that helps someone perform tasks they’d otherwise struggle to start or sustain. When used intentionally, that scaffold can expand capability. But scaffolds are meant to be removed once the building stands — problems appear when the scaffold becomes the building.

Signs of ChatGPT overdependence

Overdependence (relying on AI to do tasks that should exercise human judgment, or to provide emotional validation) looks different from productive augmentation. Look for these observable markers:

- Frequent queries for basic planning tasks (e.g., “what time should I leave?” or “write my to‑do list”) several times per day.

- Declining ability to complete routine tasks without the AI present.

- Visible anxiety or distress when ChatGPT is unavailable or yields unpredictable answers.

- Using ChatGPT for tasks where specialist tools or human judgment are more accurate (checking train times with ChatGPT instead of a transit app).

- Repeatedly seeking reassurance or “validation” from the chatbot on personal decisions.

- Workplace risks: a small number of people become single points of failure because they alone coordinate AI-driven workflows.

Measurable indicators for teams: frequency of basic queries per user per week, drop in employee task completion when AI access is removed for an hour, and number of incidents caused by relying on generic AI answers for domain‑specific tasks.
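Teams that want to track the first two indicators could start from something like the minimal Python sketch below. It assumes a hypothetical query log in which each entry records the user, the week, and whether the query was tagged as a “basic” planning request. Real AI products may not expose logs like this, and deciding what counts as basic is your own judgement call. The completion-drop figure compares task completion rates in a normal period with those in an “AI off” period of the same length.

```python
from collections import defaultdict

# Hypothetical log entries: who asked, in which week, and whether the query
# was tagged as a basic planning request (the tagging rule is an assumption).
queries = [
    {"user": "alice", "week": 1, "is_basic": True},
    {"user": "alice", "week": 1, "is_basic": True},
    {"user": "alice", "week": 1, "is_basic": False},
    {"user": "bob", "week": 1, "is_basic": True},
]


def basic_queries_per_user_week(log):
    """Count basic planning queries for each (user, week) pair."""
    counts = defaultdict(int)
    for q in log:
        if q["is_basic"]:
            counts[(q["user"], q["week"])] += 1
    return dict(counts)


def completion_drop(done_with_ai, total_with_ai, done_without_ai, total_without_ai):
    """Percentage-point drop in task completion rate when AI access is removed.

    Both totals must be greater than zero.
    """
    rate_with = done_with_ai / total_with_ai
    rate_without = done_without_ai / total_without_ai
    return (rate_with - rate_without) * 100
```

For example, if a team completes 18 of 20 tasks with AI access and 12 of 20 during an AI-off hour, `completion_drop(18, 20, 12, 20)` reports a drop of about 30 percentage points.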

Dr Stephen Blumenthal warns we may be seeing the start of a pattern he calls “chatbot overdependence syndrome” — where tools intended as aids become our default decision‑makers.

How to talk to a partner about AI use

Approach from curiosity, not accusation. People often lean on AI because it soothes underlying anxiety or compensates for overwhelm. A calm, short conversation does more than nagging or ultimatums.

Three-step conversational script (two versions)

- Open with curiosity: “I’ve noticed you use ChatGPT a lot. I’m curious what it does for you. What gaps is it filling?”

- Reflect needs and offer alternatives: “If it’s helping reduce stress about planning, could we try one hour a day where you use a checklist or a specialist app instead, and compare how that feels?”

- Collaborate and reassure: “You’re great at getting things done. This is about finding a balance so you keep those skills and feel less anxious when the tool isn’t there.”

Shorter alternative phrasing for tense moments:

- “Help me understand what the AI does that makes your day easier.”

- “Would you try a small experiment with me — one AI‑free planning session this week?”

Case study: Rebalancing AI use (practical example)

Tom runs a small consultancy and was in the top percentile of ChatGPT users. He leaned on it to write client emails, draft invoices, and work out logistics. On paper his output looked good, but he felt more anxious and noticed he hesitated when the internet was down. His partner suggested a two‑week experiment: replace ChatGPT for scheduling and travel queries with specialist apps (calendar, Trainline) and reserve ChatGPT for drafting creative briefs.

Week one: Tom struggled at first. He missed the instant reassurance the chatbot provided and reverted to it a few times. With a simple checklist and a 10‑minute morning planning ritual, he gradually regained confidence in making small, everyday choices on his own.

Week two: productivity remained similar, but Tom reported less anxiety when offline and felt better about his competence. He also identified specific tasks where AI saved time (drafting proposals) and where it introduced risk (using the chatbot to verify flight times). By the end of the experiment, Tom codified a rule: use ChatGPT for ideation and draft work, use verified specialist tools for factual or time‑sensitive tasks, and perform at least one “manual” planning session per day.

Outcome: slightly higher perceived autonomy, no loss in output quality, and a clearer division of labor between human skills and AI automation.

AI for business: preserving skills while gaining efficiency

Organizations face the same human problem at scale. AI automation can dramatically boost throughput, but when teams stop practicing core skills, institutions become brittle. That brittleness shows up as “governance blind spots”: areas where no one retains the knowledge to act if the AI fails.

Leaders should treat AI agents as amplifiers whose use must be governed. Practical measures include:

- Pilot policy: Start small, define which tasks are appropriate for generative AI and which require human sign‑off.

- Audit trails: Keep records of AI outputs tied to users and decisions so you can trace back and learn when errors occur.

- Role‑based access: Limit advanced AI capabilities to trained staff and retain manual fallback procedures.

- Skill‑preservation drills: Schedule periodic “AI off” sessions where teams perform tasks without automation.

- Environmental and cost metrics: Track usage volume and prefer lighter models for routine queries; batch non‑urgent requests to reduce compute.

- Shadowing and handover: Require documentation of AI‑assisted processes, and have a human demonstrate each task end‑to‑end at regular intervals.
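The audit-trail and role-based access measures can be combined in a small gate that every AI request passes through. This is a minimal Python sketch under stated assumptions: the role names, task categories and `decision_ref` field are hypothetical, the policy table stands in for whatever your organization defines, and the trail is kept in memory with a JSON Lines export rather than in a real audit system.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical policy: which roles may use AI for which task categories.
ALLOWED = {
    "trained_staff": {"drafting", "summarizing", "ideation"},
    "general": {"drafting"},
}


@dataclass
class AuditEntry:
    timestamp: str
    user: str
    role: str
    task_category: str
    allowed: bool
    decision_ref: str  # hypothetical ID of the decision the AI output fed into


# In-memory trail for this sketch; a real deployment would use durable storage.
AUDIT_LOG: list[AuditEntry] = []


def request_ai(user: str, role: str, task_category: str, decision_ref: str) -> bool:
    """Check the role policy and record the attempt, whether allowed or not."""
    allowed = task_category in ALLOWED.get(role, set())
    AUDIT_LOG.append(
        AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            role=role,
            task_category=task_category,
            allowed=allowed,
            decision_ref=decision_ref,
        )
    )
    return allowed


def export_jsonl(entries: list[AuditEntry]) -> str:
    """Serialize the trail as JSON Lines so it can be reviewed after an error."""
    return "\n".join(json.dumps(asdict(e)) for e in entries)
```

Calling `request_ai("sam", "general", "ideation", "PROJ-12")` returns `False` but still records the attempt. Logging refusals as well as approvals is a deliberate choice: it shows where people want AI help but have not yet been trained for it.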

Decision framework: ChatGPT vs specialist app

Ask these four questions before turning to a generative AI agent:

- Accuracy required: Is this task fact‑sensitive or time‑critical? If so, use a specialist app.

- Auditability: Will you need to trace and explain the decision later? If so, prefer systems with logs.

- Privacy and compliance: Is sensitive data involved? If so, avoid public models or use enterprise controls.

- Skill maintenance: Will using AI here remove practice opportunities for your team or yourself?
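The four questions can also be written down as a checklist function, which some teams may find easier to embed in an internal tool or onboarding guide. This Python sketch is illustrative only: the `Task` fields map one-to-one onto the questions above, and the wording of each caution is an assumption. It returns every caution that applies rather than a single verdict, because the questions are not mutually exclusive.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Answers to the four questions for one task (True means yes)."""
    fact_sensitive_or_time_critical: bool  # Accuracy required
    needs_audit_trail: bool                # Auditability
    involves_sensitive_data: bool          # Privacy and compliance
    removes_practice: bool                 # Skill maintenance


def review_task(task: Task) -> list[str]:
    """Return the cautions raised by the four questions.

    An empty list means none of the questions argues against using a
    generative AI agent for this task.
    """
    cautions = []
    if task.fact_sensitive_or_time_critical:
        cautions.append("Use a specialist app for facts or timings.")
    if task.needs_audit_trail:
        cautions.append("Prefer a system that keeps logs.")
    if task.involves_sensitive_data:
        cautions.append("Avoid public models or use enterprise controls.")
    if task.removes_practice:
        cautions.append("Do it yourself to keep the skill in practice.")
    return cautions
```

Checking train times, for instance, would be `Task(True, False, False, False)`, which returns a single caution pointing to a specialist app.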

Small experiments to rebalance use

- AI‑free planning hour: One dedicated hour each day to plan with checklists, calendars, and human judgment.

- Skill sprints: Weekly tasks performed without AI to keep core competencies sharp.

- Shadow sessions: Have someone replicate an AI‑generated workflow manually to confirm understanding.

- Batching queries: Group non‑urgent AI requests to reduce energy use and encourage deliberation.

Environmental note

Running large models consumes energy, so even everyday personal use has a non‑zero footprint. Mitigation is practical: choose smaller or more efficient models for routine tasks, batch non‑urgent requests, and prefer providers that publish energy or carbon metrics when possible. For organizations, include compute and sustainability in the ROI calculus for AI deployments.

Key takeaways and questions

Is heavy everyday use of ChatGPT potentially harmful?

Yes. Generative AI can boost productivity but becomes harmful when it replaces essential planning and decision practice, or serves as emotional validation instead of human support.

Does ADHD make someone more likely to rely on AI?

Yes. Executive‑function challenges make conversational AI attractive because it reduces friction in planning and task initiation. That makes thoughtful boundaries even more important.

How should partners raise concerns?

Ask with curiosity about what the tool is providing, avoid lecturing, and propose small, time‑bound experiments that preserve autonomy while keeping the productive benefits of AI.

What should leaders do when rolling out generative AI?

Adopt governance: pilot policies, audit logs, role limits, skill‑preservation drills and environmental tracking — treat AI as augmentation, not a substitute for human judgment.

ChatGPT and other AI agents open new possibilities for productivity and accessibility, especially for people with ADHD. The task for partners and leaders is to harvest those benefits while preventing the slow erosion of independent skills. Curiosity, clear rules and small experiments will do more to restore balance than blame. Practical next steps that make the conversation concrete include a one‑page partner script for home and a leader checklist to deploy in your team.