Multi‑Agent Video Analysis with Strands, Llama 4, and Amazon Bedrock

TL;DR: Break large video workloads into a team of specialized AI agents to gain scalability, auditability, and better failure isolation. This pattern pairs Strands Agents SDK with Meta’s Llama 4 (Scout/Maverick) on Amazon Bedrock, OpenCV for frame extraction, and S3/SageMaker for staging and experimentation. Start with a small pilot to measure accuracy, latency, and cost; then iterate with domain models, observability, and provenance controls.

Who should read this

- Security, operations, and surveillance teams exploring automated incident triage.

- Media and content teams that need searchable metadata and narrative summaries for hours of footage.

- Industrial engineers wanting temporal analysis across sensor and camera feeds.

- Engineering leaders deciding whether to use multi‑agent systems or a single multimodal LLM.

Problem and business value

Raw video is dense, expensive to review manually, and often time-sensitive. A multi‑agent pipeline compresses hours of footage into structured events and human-friendly summaries, reducing analyst time and surfacing actionable anomalies. Rather than asking one model to do everything, split responsibilities: frame extraction, visual detection, temporal reasoning, and narrative summarization. This lets teams tune each component—scale the vision models, tighten validation rules, and control provenance of results for audits and compliance.

“Multi-agent systems distribute specialized capabilities so a team of models can scale, tolerate failure, and adapt to changing goals.”

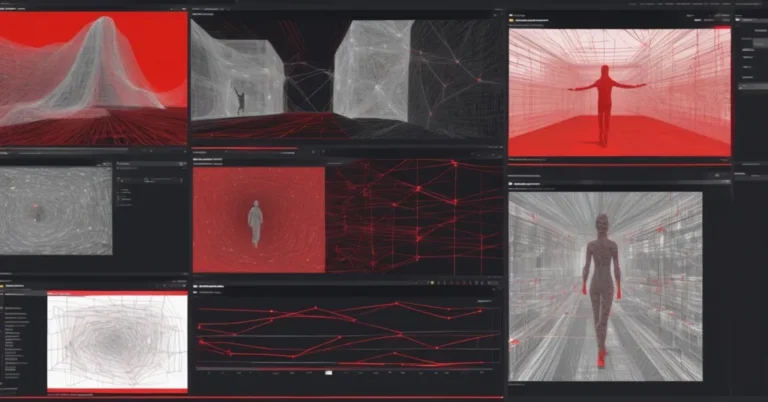

Architecture overview

The reference pattern uses a coordinator agent (a Llama 4 instance hosted on Bedrock) to orchestrate a set of specialized agents. Agents are exposed as callable tools, so one agent can invoke another and consume its structured JSON output. The core infrastructure components are Amazon Bedrock and Bedrock AgentCore, SageMaker notebooks for experimentation, S3 for staging, OpenCV for frame extraction, and Gradio for quick demos.

- Coordinator agent (Llama 4 on Bedrock): orchestrates work, validates schemas, and creates final summaries.

- Frame extraction agent (OpenCV): samples and stages frames to S3.

- Visual analysis agent (vision model): detects objects, scenes, and attributes, returning structured JSON.

- JSON retrieval agent: pulls analysis blobs from S3 for coordinator reasoning.

- Temporal analysis agent: turns frame-level observations into events and timelines.

- Summary generation agent (LLM): produces narrative summaries for human triage.

Design principle: use a linear coordinator flow—call one tool, consume its JSON, then move to the next step. That minimizes ambiguous prompt state and makes the pipeline auditable.

“Agents as Tools” is a pattern where individual agents are exposed as callable functions that other agents can invoke to build complex workflows.
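The linear coordinator flow and the agents-as-tools idea can be sketched in plain Python. This is a minimal, framework-free illustration under stated assumptions. Each "agent" is a hypothetical local stub that returns a JSON string. The coordinator calls the stubs in a fixed order, parses and checks each result before moving on, and appends every call to an audit log. In the Strands Agents SDK you would instead expose each specialist as a tool (for example with its `@tool` decorator) and let the Bedrock-hosted Llama 4 coordinator invoke it. The stub names, payload fields, and audit-record shape here are illustrative only.

```python
import json
from typing import Callable

# Hypothetical stand-ins for the specialized agents. Each returns a JSON string,
# mirroring how an agent exposed as a tool hands structured output back.
def extract_frames(payload: dict) -> str:
    return json.dumps({"frames": [f"{payload['video']}/frame-{i:04d}.jpg" for i in range(1, 4)]})

def analyze_frames(payload: dict) -> str:
    return json.dumps({"detections": [{"frame": f, "labels": ["person"]} for f in payload["frames"]]})

def summarize(payload: dict) -> str:
    return json.dumps({"summary": f"person detected in {len(payload['detections'])} frames"})

# Ordered pipeline: (step name, tool, keys the tool's JSON output must contain).
PIPELINE: list[tuple[str, Callable[[dict], str], set[str]]] = [
    ("extract_frames", extract_frames, {"frames"}),
    ("analyze_frames", analyze_frames, {"detections"}),
    ("summarize", summarize, {"summary"}),
]

def run_linear(video: str) -> tuple[dict, list[dict]]:
    """Call one tool, validate its JSON, then feed the merged state to the next step."""
    state: dict = {"video": video}
    audit: list[dict] = []
    for name, tool_fn, required in PIPELINE:
        result = json.loads(tool_fn(state))  # raises ValueError on malformed JSON
        missing = required - set(result)
        if missing:
            raise ValueError(f"{name} output missing keys: {sorted(missing)}")
        audit.append({"step": name, "output_keys": sorted(result)})
        state = {**state, **result}  # later steps see all earlier outputs
    return state, audit
```

Keeping the order fixed and validating between steps is what makes the audit log meaningful. Each entry corresponds to exactly one tool call whose output was checked before anything downstream consumed it.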

Why Llama 4 Scout / Maverick matter

Ultralong context windows change the tradeoffs. Meta advertises a 10M-token context window for Scout and a 1M-token window for Maverick. Managed environments may impose lower practical limits; some examples cite a usable cap of roughly 3.5M tokens for Scout on Bedrock. Even under these conservative limits, the ability to reason across thousands to millions of tokens reduces brittle chunking logic and enables cross-video, cross-document reasoning inside the coordinator agent.
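The following rough sketch shows why a larger window reduces chunking. It packs per-frame JSON records into as few coordinator calls as a token budget allows. It assumes a crude estimate of about 4 characters per token, which real tokenizers will not match. The budget should be set from the limits you benchmark on Bedrock, not from vendor headlines. The function names are hypothetical.

```python
import json

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    # Rough heuristic only; use real token counts from Bedrock usage data when available.
    return max(1, int(len(text) / chars_per_token))

def pack_records(records: list[dict], budget_tokens: int, reserve_tokens: int = 0) -> list[list[dict]]:
    """Greedily group records so each batch's estimated size stays within the budget."""
    usable = budget_tokens - reserve_tokens  # leave room for instructions and the response
    if usable <= 0:
        raise ValueError("reserve_tokens must be smaller than budget_tokens")
    batches: list[list[dict]] = []
    current: list[dict] = []
    used = 0
    for rec in records:
        cost = estimate_tokens(json.dumps(rec))
        if cost > usable:
            raise ValueError("a single record exceeds the usable budget")
        if current and used + cost > usable:
            batches.append(current)  # close the batch that would overflow
            current, used = [], 0
        current.append(rec)
        used += cost
    if current:
        batches.append(current)
    return batches
```

With a budget large enough for the whole job, `pack_records` returns a single batch and the coordinator sees every frame at once. With a small budget, the same records are split across several calls, and cross-batch reasoning then needs extra stitching logic.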

Implementation walkthrough (high level)

- Provision Bedrock (with AgentCore) and a SageMaker notebook. Enable access to the Llama 4 variants and record their model IDs. The example uses us.meta.llama4-maverick-17b-instruct-v1:0 with temperature 0 for more repeatable, near-deterministic reasoning.

- Stage video files to an S3 bucket or a SageMaker volume accessible to your notebook.

- Run the frame extraction agent (OpenCV) to sample key frames and upload images to S3.

- Call the visual analysis agent (could be an off‑the‑shelf vision model or a domain-tuned detector). Output MUST conform to a structured JSON schema so later agents can validate and reason against it.

- The coordinator pulls the JSON with the retrieval agent, runs temporal analysis over sequences, and then asks the summary generator for a narrative. Each step is logged in Bedrock AgentCore for observability and traceability.

- Write final artifacts back to S3 (e.g., s3://your-bucket/results/…/summary.json) and attach provenance metadata.
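Attaching provenance can be as simple as writing a sidecar record next to each summary. The sketch below builds one with hypothetical field names: a SHA-256 digest of the summary, the model IDs and versions that produced it, and the source frame URIs. It only builds the record. Uploading is left out, for example with boto3's `put_object`, either as object metadata or as a separate JSON object. The bucket layout is an assumption.

```python
import hashlib
import json
from datetime import datetime, timezone

def build_provenance(summary: dict, models: dict[str, str], sources: list[str]) -> dict:
    """Return a provenance record for a summary artifact (field names are illustrative)."""
    # Canonical serialization: the same summary content always yields the same digest.
    body = json.dumps(summary, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return {
        "artifact_sha256": hashlib.sha256(body).hexdigest(),
        "models": dict(sorted(models.items())),  # e.g. coordinator and vision model versions
        "sources": sorted(set(sources)),          # deduplicated frame URIs the summary used
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
```

Hashing a canonical serialization, rather than the raw bytes as written, means an auditor can recompute the digest from the stored summary regardless of key order.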

Example JSON schema (visual analysis output)

```json
{
  "frame_id": "frame-0001",
  "timestamp": "2026-01-15T10:01:23Z",
  "objects": [
    {"label": "person", "bbox": [102, 34, 64, 180], "confidence": 0.96},
    {"label": "truck", "bbox": [420, 210, 300, 150], "confidence": 0.86}
  ],
  "scene": {"label": "intersection", "confidence": 0.92},
  "notes": "license plate occluded",
  "model_version": "vision-v1.3",
  "source": "s3://your-bucket/frames/frame-0001.jpg"
}
```

The coordinator validates this JSON (schema, confidence thresholds, timestamps), groups frames into events, and produces the final narrative.
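Here is a minimal sketch of those coordinator checks. It uses only the standard library rather than a JSON Schema library. It checks required fields and types, drops objects below a confidence threshold, and parses timestamps. It then groups frames that share a label into events whenever consecutive sightings are close in time. The 0.5 threshold, the 5-second gap, and the event shape are assumptions to tune for your footage.

```python
from datetime import datetime

# Required top-level fields and their expected Python types after json.loads().
REQUIRED = {"frame_id": str, "timestamp": str, "objects": list, "source": str}

def validate_frame(frame: dict, min_conf: float = 0.5) -> dict:
    """Check required fields and types, keep only confident objects. Raises ValueError."""
    for key, typ in REQUIRED.items():
        if not isinstance(frame.get(key), typ):
            raise ValueError(f"{frame.get('frame_id', '?')}: bad or missing '{key}'")
    # fromisoformat() accepts a trailing 'Z' only on Python 3.11+, so normalize it.
    ts = datetime.fromisoformat(frame["timestamp"].replace("Z", "+00:00"))
    kept = [o for o in frame["objects"] if o.get("confidence", 0.0) >= min_conf]
    return {**frame, "objects": kept, "_ts": ts}

def group_events(frames: list[dict], max_gap_s: float = 5.0) -> list[dict]:
    """Extend a label's open event if the new sighting is within max_gap_s, else start one."""
    open_events: dict[str, dict] = {}
    events: list[dict] = []
    for f in sorted(frames, key=lambda fr: fr["_ts"]):
        for label in {o["label"] for o in f["objects"]}:
            ev = open_events.get(label)
            if ev and (f["_ts"] - ev["end"]).total_seconds() <= max_gap_s:
                ev["end"] = f["_ts"]
                ev["frames"].append(f["frame_id"])
            else:
                ev = {"label": label, "start": f["_ts"], "end": f["_ts"], "frames": [f["frame_id"]]}
                open_events[label] = ev
                events.append(ev)
    return sorted(events, key=lambda e: (e["start"], e["label"]))
```

Because each event keeps its frame IDs, every statement the summary generator later makes about an event can be traced back to specific frames.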

Sample narrative output

At 10:01:23 two adults entered the east entrance near a delivery truck; one person carried a large box and placed it on the sidewalk. At 10:04:10 a silver sedan parked nearby, the driver exited and walked north toward the storefront—no aggressive behavior detected. (Confidence: 0.89)

Operational considerations, risks & mitigations

- Cost and latency: Large-context LLM calls and high-frequency vision models are the main cost drivers. Mitigation: batch frames, tune sampling rates, and parallelize visual work where suitable. Use AgentCore observability to find hotspots.

- Model caps vs headline specs: Managed services may expose lower practical context windows than vendor headlines. Mitigation: benchmark Scout/Maverick on Bedrock with representative workloads early in the pilot.

- Hallucinations and accuracy drift: LLMs can invent events. Mitigation: require structured evidence (timestamps + frame references), add a verification agent that checks LLM claims against raw JSON, and use human-in-the-loop review for high-risk events.

- Data governance & PII: Video often contains personal data. Mitigation: enforce redaction agents, encryption, strict IAM policies, and short retention windows for raw footage.

- Availability & failure isolation: Agents may fail independently. Mitigation: retry strategies, fallbacks to cheaper models, and flagging for human review when consistency checks fail.
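The verification-agent mitigation can start as a deterministic check rather than another LLM call. This sketch assumes the summary generator is asked to emit its claims as JSON, each with a label and the frame IDs it relies on; that claim shape is hypothetical. A claim passes only if it cites at least one frame, and every cited frame exists in the validated visual-analysis output and contains the claimed label. Failed claims go to human review. The share of failed claims is one way to compute the hallucination rate listed under the metrics.

```python
def verify_claims(claims: list[dict], frames: dict[str, dict]) -> tuple[list[dict], list[dict]]:
    """Split claims into (supported, flagged) by checking each cited frame for the label."""
    supported: list[dict] = []
    flagged: list[dict] = []
    for claim in claims:
        cited = claim.get("frame_ids", [])
        ok = bool(cited) and all(
            fid in frames
            and any(o["label"] == claim["label"] for o in frames[fid]["objects"])
            for fid in cited
        )
        (supported if ok else flagged).append(claim)  # flagged claims go to human review
    return supported, flagged

def hallucination_rate(supported: list[dict], flagged: list[dict]) -> float:
    """Fraction of claims that failed the evidence check."""
    total = len(supported) + len(flagged)
    return len(flagged) / total if total else 0.0
```

Claims without evidence are treated as failures rather than passes. This matches the requirement that every narrative statement carry timestamps and frame references.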

Metrics you should track

- End-to-end latency (upload → summary returned)

- Cost per minute of processed footage and cost per completed job

- Detection accuracy: precision/recall for target event types against a golden dataset

- Hallucination rate: percent of LLM claims that fail schema or evidence checks

- Analyst time saved (minutes saved per hour of footage)
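Precision and recall against a golden dataset reduce to counting matches. This sketch assumes predictions and labels are both reduced to `(video_id, event_type, start_second)` tuples. A prediction counts as a true positive when an unmatched golden event of the same type in the same video starts within a tolerance window. The 10-second tolerance and the tuple shape are assumptions. Overlap-based matching may suit some event types better.

```python
from collections import defaultdict

Event = tuple[str, str, float]  # (video_id, event_type, start_second)

def precision_recall(predicted: list[Event], golden: list[Event],
                     tolerance_s: float = 10.0) -> dict[str, tuple[float, float]]:
    """Per-event-type (precision, recall) using greedy one-to-one matching."""
    counts = defaultdict(lambda: {"tp": 0, "pred": 0, "gold": 0})
    unmatched = list(golden)
    for _, etype, _ in golden:
        counts[etype]["gold"] += 1
    for video, etype, start in predicted:
        counts[etype]["pred"] += 1
        for i, (gv, gt, gs) in enumerate(unmatched):
            if gv == video and gt == etype and abs(gs - start) <= tolerance_s:
                counts[etype]["tp"] += 1
                del unmatched[i]  # each golden event can be matched only once
                break
    return {
        etype: (c["tp"] / c["pred"] if c["pred"] else 0.0,
                c["tp"] / c["gold"] if c["gold"] else 0.0)
        for etype, c in counts.items()
    }
```

Reporting per event type matters because a pipeline can do well on deliveries while missing loitering entirely. A single pooled number would hide that gap.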

Back‑of‑envelope cost guidance

Exact costs vary by model choice, token usage, and vision model compute. Think of cost drivers as: (1) number of frames analyzed, (2) compute profile of your vision model, (3) token usage for Llama 4 calls (context + output), and (4) storage and data transfer. To estimate, run a single representative job in dev, measure model call durations and token counts via Bedrock logs, then multiply by projected throughput. Use that as a baseline and iterate—sampling and domain-tuned detectors usually give the biggest savings.
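The measure-then-multiply approach can be written as a small calculator. All unit prices here are placeholders, not real rates. Replace them with current pricing for your Region and models. The inputs are the quantities you would read from Bedrock logs and your vision endpoint's metrics for one representative job.

```python
from dataclasses import dataclass

@dataclass
class JobMeasurements:
    footage_minutes: float
    frames_analyzed: int
    input_tokens: int   # Llama 4 context tokens across all coordinator calls
    output_tokens: int
    storage_gb: float

@dataclass
class UnitPrices:
    # Placeholders only; these are NOT real AWS prices.
    per_frame_vision: float
    per_1k_input_tokens: float
    per_1k_output_tokens: float
    per_gb_storage: float  # simplification: treated as a flat per-job charge

def job_cost(m: JobMeasurements, p: UnitPrices) -> dict[str, float]:
    """Break one job's cost into the four drivers, plus total and cost per footage minute."""
    parts = {
        "vision": m.frames_analyzed * p.per_frame_vision,
        "llm_input": m.input_tokens / 1000 * p.per_1k_input_tokens,
        "llm_output": m.output_tokens / 1000 * p.per_1k_output_tokens,
        "storage": m.storage_gb * p.per_gb_storage,
    }
    total = sum(parts.values())
    return {**parts, "total": total, "per_minute": total / m.footage_minutes}
```

Keeping the drivers separate shows where savings come from. Halving the sampling rate halves only the `vision` term, while trimming the coordinator's context shrinks `llm_input`.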

Pilot checklist — 30 day plan

- Select 5–10 representative videos (10–60 minutes) and define 3–5 target event types (e.g., trespass, delivery, loitering).

- Implement frame extraction with a sampling strategy (fixed fps or motion-based heuristics) and store frames in S3.

- Deploy a single visual analysis agent (off‑the‑shelf) and ensure outputs match the JSON schema above.

- Run a simple coordinator on Bedrock using Llama 4 Maverick for temporal stitching and summary generation; log tokens and latency.

- Measure KPIs: latency, cost per job, precision/recall against a small labeled set, and analyst time saved in a quick user study.

- Add governance: schema validation agent, PII redaction, IAM controls, and an audit trail in AgentCore.

- Decide next steps: scale parallel visual agents, evaluate Scout for larger context needs, or tune detectors for domain data.
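The two sampling strategies from the checklist can be compared in a small sketch. `fixed_rate_indices` picks frame indices for a target sampling rate, given the video's native fps. `motion_indices` keeps a frame only when its mean absolute pixel difference from the last kept frame exceeds a threshold. Frames are modeled as flat lists of grayscale values so the logic runs without dependencies. With OpenCV you would read frames via `cv2.VideoCapture` (the native rate is available from `CAP_PROP_FPS`) and compute differences with `cv2.absdiff`. The function names and the default threshold are assumptions to tune.

```python
def fixed_rate_indices(total_frames: int, native_fps: float, sample_fps: float) -> list[int]:
    """Frame indices that sample the video at roughly sample_fps."""
    if sample_fps <= 0 or native_fps <= 0:
        raise ValueError("fps values must be positive")
    step = max(1, round(native_fps / sample_fps))  # keep every step-th frame
    return list(range(0, total_frames, step))

def mean_abs_diff(a: list[int], b: list[int]) -> float:
    """Average per-pixel absolute difference between two equal-sized grayscale frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def motion_indices(frames: list[list[int]], threshold: float = 10.0) -> list[int]:
    """Keep frame 0, then any frame that differs enough from the last kept frame."""
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[kept[-1]], frames[i]) > threshold:
            kept.append(i)
    return kept
```

Comparing against the last kept frame, rather than the immediately preceding one, stops slow gradual changes from being skipped forever. Fixed-rate sampling remains the simpler baseline to benchmark against.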

When not to use this

- If sub-second real-time responses are required (this pattern is batch or near-real-time unless you optimize heavily).

- If data residency or strict privacy rules forbid cloud processing—consider an on‑prem or private‑cloud variant with similar agent orchestration.

- If your use case is tiny and a single multimodal LLM prompt reliably handles it—overhead of orchestration may not justify the return.

Where to start and resources

- Fork the reference demo and agent code: Meta-Llama-on-AWS (sample repo).

- Strands Agents SDK docs and examples: Strands Agents.

- Bedrock and AgentCore documentation for model hosting and observability: Amazon Bedrock.

- Meta Llama 4 announcements and specs: refer to Meta AI materials for Scout and Maverick capabilities.

“Llama 4’s ultralong context windows open possibilities for whole-book or whole-codebase summarization, deep conversation memory, and large cross-document tasks.”

Multi‑agent pipelines are not a silver bullet, but they are a pragmatic architecture for AI automation when production requirements demand specialization, auditability, and incremental upgrades. Keep the pilot small, measure the metrics above, and treat provenance and safety as first‑class features rather than add-ons.

Author: Sebastian Bustillo, Enterprise Solutions Architect at AWS.